Our team – including Dr Bethany Waterhouse-Bradley of Ulster University – has recently created a new resource for employers to increase understanding of how algorithmic racial bias occurs in new human resources tools, and how to address it.

The toolkit – developed for the European Network Against Racism – drew on discussions with employers held by ENAR’s Equal@Work Platform as well as academic and policy research on algorithmic bias. The Equal@Work Platform is a space for employers, trade unions, public authorities and NGOs to collaborate on innovative solutions to diversity management. Members of the platform explore how to integrate an anti-racist approach, ensuring improved access to the workplace for people of colour and an end to structural discrimination in the labour market.

DOWNLOAD THE TOOLKIT: Artificial intelligence in HR: how to address racial biases and algorithmic discrimination in HR?

Why do we need a new toolkit for employers?

The use of algorithmic decision making can reduce the time HR professionals and hiring managers spend screening large numbers of applicants, improve selection processes, and provide the potential for predictive analysis. Recruitment is the principal arena in which AI functions are being adopted. These include targeted recruitment advertising, bulk screening of CVs and applications, providing recommendations to human decision makers on who to invite to interview, and analysing candidates’ performance in selection tests and interviews.

As awareness grows of the problem of racist attitudes in recruitment – particularly with the popularity of the ‘unconscious bias’ approach and the growing market of solutions to address it – many employers assume that automated decision making is more effective at reducing bias than human hiring managers.

Yet AI is now recognised to reproduce and amplify human biases, and its particular capacity to exaggerate bias in HR processes is widely acknowledged as deserving attention. Algorithms can reinforce discrimination if they focus on qualities or markers associated only with particular (already dominant) groups. While some of these markers are easily recognised (e.g. career gaps and gender), the current lack of racial diversity in workplaces across Europe makes markers of racial bias less well recognised.

Algorithms which reinforce these biases will further reduce diversity across the European labour market and reduce corporate flexibility in long-term workforce planning as well as the development of markets. In spite of awareness of these emerging problems, the scale of the potential issues is often understated, and the focus is often on the technical aspects, or on fixing the tools. This both increases the likelihood of these effects being overlooked and shifts focus away from the structural and institutional problems which often lead to undesirable outcomes from the use of AI.

Diversity in the workforce is key to business success today. There is a direct correlation between the diversity of a workforce and the breadth of its perspective. Diverse workforces are also more productive, so employers should actively seek ways to recruit candidates into the workforce from different backgrounds.

But trust in algorithmic decision making can be undermined by poor recruitment outcomes, public accountability for bias, and errors in selection and performance functions, leading to ‘algorithmic aversion’. Building confidence amongst HR and D&I managers that AI can reduce risks for firms in respect of discrimination and recruitment costs is a valuable service to business.

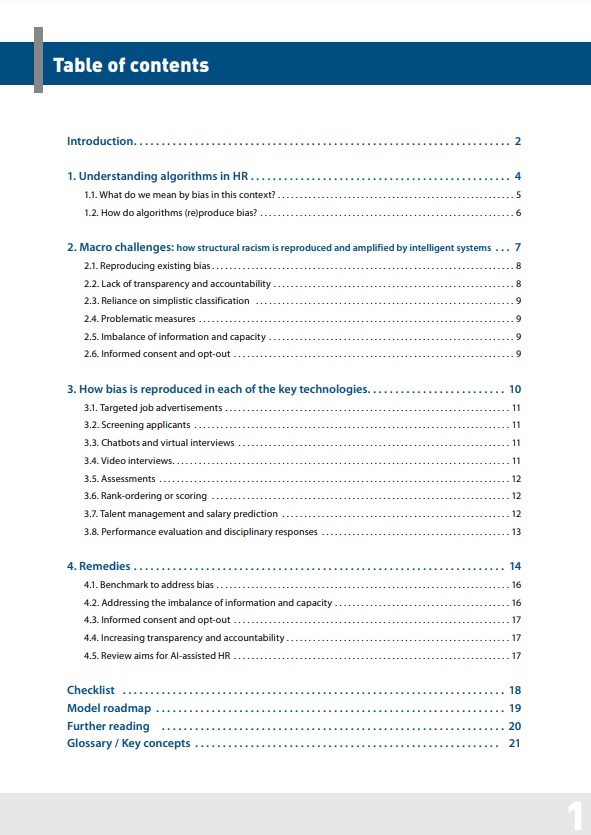

Outline of toolkit

This toolkit is designed for Human Resources and Diversity & Inclusion Managers, as well as Programmers. It aims to ensure that consumers of off-the-shelf and custom AI solutions for Human Resource Management have a clear guide to challenges, solutions and good practice, in a format which supports conversations with the Programmers providing those solutions.

The toolkit explores the role of human bias and structural discrimination in discriminatory or unethical AI programmes, and provides clear and practical steps to ensure companies have the necessary cultural and technological tools to responsibly digitalise HR systems with the help of intelligent systems.

With this guidance, HR and D&I managers will come to understand bias reproduction and amplification, and gain the confidence to address bias risks produced by inadequate or inappropriate training data, simplistic or reductive classifications, or other human errors that lead to biased outcomes from algorithmic decision making. Importantly, the toolkit will support HR teams in effectively transferring their existing knowledge of discriminatory hiring practices, and of building diverse workplaces, to the responsible deployment of intelligent systems that aid those objectives.